Sunday, November 18, 2007

Testing Practices

According to Martin Fowler, "Whenever you are tempted to type something into a print statement or a debugger expression, write it as a test instead." At first you will find that you have to create new fixtures all the time, and testing will seem to slow you down a little. Soon, however, you will begin reusing your library of fixtures, and new tests will usually be as simple as adding a method to an existing TestCase subclass.
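Here is a minimal Python `unittest` sketch of that idea. The fixture is built once in `setUp`, and each new check is just one more `test_` method on the existing `TestCase` subclass. The `Account` class is hypothetical; it stands in for whatever you would otherwise inspect with a print statement.

```python
import unittest


class Account:
    """Hypothetical class under test."""

    def __init__(self, balance=0):
        self.balance = balance

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.balance += amount


class AccountTest(unittest.TestCase):
    def setUp(self):
        # Shared fixture: rebuilt fresh before every test method.
        self.account = Account(balance=100)

    def test_deposit_increases_balance(self):
        # Instead of print(self.account.balance), assert the expected value.
        self.account.deposit(50)
        self.assertEqual(self.account.balance, 150)

    def test_negative_deposit_rejected(self):
        # A new test reuses the same fixture: it is just one more method.
        with self.assertRaises(ValueError):
            self.account.deposit(-10)
```

Save it as a module and run it with `python -m unittest <module>`; as the library of fixtures grows, most new tests stay this small.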

You can always write more tests. However, you will quickly find that only a fraction of the tests you can imagine are actually useful. What you want is to write tests that fail even though you think they should work, or tests that succeed even though you think they should fail. Another way to think of it is in cost/benefit terms. You want to write tests that will pay you back with information.

Here are a couple of the times that you will receive a reasonable return on your testing investment:

During Development:

When you need to add new functionality to the system, write the tests first. Then you are done developing when the tests pass.

During Debugging:

When someone discovers a defect in your code, first write a test that will succeed if the code is working. Then debug until the test succeeds.
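A small sketch of the debugging workflow, assuming a hypothetical `average` function whose reported defect was a crash on an empty list. The regression test is written first from the defect report; it fails until the fix (the empty-input check) is in place, and then it keeps the defect from coming back.

```python
import unittest


def average(values):
    """Hypothetical function that used to raise ZeroDivisionError on []."""
    # Fix applied after the defect report: empty input yields 0.0.
    if not values:
        return 0.0
    return sum(values) / len(values)


class AverageRegressionTest(unittest.TestCase):
    def test_empty_list_defect(self):
        # Written first, straight from the defect report; failed until the fix.
        self.assertEqual(average([]), 0.0)

    def test_normal_case_still_works(self):
        # Guards against the fix breaking the ordinary case.
        self.assertEqual(average([2, 4, 6]), 4.0)
```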

One word of caution about your tests. Once you get them running, make sure they stay running. There is a huge difference between having your suite running and having it broken. Ideally, you would run every test in your suite every time you change a method. Practically, your suite will soon grow too large to run all the time. Try to optimize your setup code so you can run all the tests. Or, at the very least, create special suites that contain all the tests that might possibly be affected by your current development. Then, run the suite every time you compile. And make sure you run every test at least once a day: overnight, during lunch, during one of those long meetings.
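One way to build the "special suites" mentioned above is with `unittest.TestSuite`. In this sketch the two test classes are hypothetical placeholders: `focused_suite` holds only the tests likely to be affected by the current change (run it every time you compile), while `full_suite` holds everything (run it at least once a day).

```python
import unittest


class ParserTest(unittest.TestCase):
    """Hypothetical tests for the area currently under development."""

    def test_split(self):
        self.assertEqual("a,b".split(","), ["a", "b"])


class ReportTest(unittest.TestCase):
    """Hypothetical tests for an unrelated area."""

    def test_upper(self):
        self.assertEqual("ok".upper(), "OK")


def focused_suite():
    """Only the tests that might be affected by the current change."""
    suite = unittest.TestSuite()
    suite.addTests(unittest.defaultTestLoader.loadTestsFromTestCase(ParserTest))
    return suite


def full_suite():
    """Every test in the project."""
    suite = unittest.TestSuite()
    for case in (ParserTest, ReportTest):
        suite.addTests(unittest.defaultTestLoader.loadTestsFromTestCase(case))
    return suite
```

Either suite can be run with `unittest.TextTestRunner().run(focused_suite())`.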

Sunday, September 30, 2007

Web Test tools

This article lists some important load and performance testing tools for web applications, along with their core features, so that you can get familiar with their capabilities.

Load and performance test tools

Curl-Loader:

This is an open source tool written in C. It is useful for testing the load and behavior of a large number of FTP/FTPS and HTTP/HTTPS clients, each with its own source IP address. Curl-Loader uses real client protocol stacks written in C: the HTTP and FTP stacks of libcurl and the TLS/SSL stack of OpenSSL. The activity of each virtual client is logged, and the logged data includes the following information: resolving, connection establishment, sending of requests, receiving responses, headers and data received/sent, network errors, and TLS/SSL and application-level (HTTP, FTP) events and errors.

OpNet LoadScaler:

It is a load testing tool from OPNET Technologies. It lets you create tests without programming and generate loads against web applications and other services, including Web Services, FTP, and email. You record end-user browser activity in the OPNET TestCreator™ authoring environment to automatically generate test scripts in industry-standard JavaScript, and then modify, extend, and debug the tests with the included JavaScript editor. Alternatively, you can drag and drop icons onto the test script tree; no knowledge of a scripting language is required to customize test scripts.

Stress Tester:

It is a load and performance testing tool for web applications. Its features include advanced user journey modeling, scalable load, system resource monitors, and results analysis. No scripting is required. It is suitable for any Web, JMS, IP, or SQL application and is OS-independent.

Proxy Sniffer:

It is a stress and load testing tool with the following features:

. HTTP/S Web Session Recorder that can be used with any web browser.

. Recordings can then be used to automatically create optimized Java-based load test programs.

. Automatic protection from "false positive" results by examining actual web page content.

. Detailed Error Analysis using saved error snapshots and real-time statistics.

Testing Master:

Testing Master is a powerful load testing tool with the following characteristics:

. IP spoofing

. Multiple simultaneous test cases

. Website testing features for sites with dynamic content and secure HTTPS pages

NeoLoad:

It is a web load testing tool with a user-friendly graphical interface that can design complex scenarios to handle real-world applications. It has the following features:

. Data replacement

. Data extraction and SOAP support

. System monitoring (Windows, Linux, IIS, Apache, WebLogic, WebSphere...)

. SSL recording

. PDF/HTML/Word reporting

. IP spoofing

. It is multi-platform and can run on Windows, Linux, and Solaris

QTest:

This tool is used for web load testing and has the following characteristics:

. Cookies are managed natively

. Shortened script modeling stage

. HTML and XML parser

. Option of developing custom monitors using supplied APIs

. Display and retrieval of any element from an HTML page or an XML stream in test scripts

LoadDriver:

A powerful testing tool equipped with modern technologies. It has the following capabilities:

. Rather than simulating browsers, it directly drives multiple instances of MSIE (Microsoft Internet Explorer)

. It supports browser side scripts along with HTTP 1.0/1.1, HTTPS, cookies, cache, and Windows authentication

. Data such as the individual user ID, password, starting page, think times, data to enter, links to click, cache, and initial cache state can be taken from text files or a custom ODBC data source and passed to the tests

Site Tester1:

It is a load testing tool that requires JDK 1.2 or higher to run. Its functions include HTTP 1.0/1.1 compatible requests, POST/GET methods, cookies, multi-threaded or single-threaded execution, definition of requests, jobs, procedures, and tests, reading and writing XML-formatted files for test definitions and test logs, and generating various reports in HTML format.

Microsoft WCAT load test tool:

WCAT (Web Capacity Analysis Tool) is an efficient tool from Microsoft Corporation for load testing Internet Information Services (IIS) servers.

WebPerformance Load Tester:

It is a load tester with the following features:

. It supports all browsers and web servers.

. Records and allows viewing of exact bytes flowing between browser and server

. No scripting required.

. Modem simulation allows each virtual user to be bandwidth limited.

. It can automatically handle variations in session-specific items such as cookies, usernames, passwords, IP addresses, and any other parameter to simulate multiple virtual users

. It can be used on Windows, Linux, UNIX and Solaris platforms.

Wednesday, July 25, 2007

Selecting an Object Order Preference

These recognition methods are saved as arguments in script commands so that Robot can correctly identify the same objects during playback.

| Object Order Preference | Recognition Method Order | Comments |
| --- | --- | --- |
| <Default> | Object Name, Label and/or Text, Index, ID | Index comes before ID. In some environments, such as PowerBuilder and Visual Basic, the ID changes each time the developer creates an executable file and is therefore not a good recognition method. |
| C++ Recognition Order | Object Name, Label and/or Text, ID, Index | ID comes before Index. In some environments, such as C++, the ID does not usually change and is therefore a good recognition method. |
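To make the difference between the two orders concrete, here is a small Python model. It is not how Robot is implemented: objects are plain dicts, the attribute names (`name`, `label_or_text`, `index`, `id`) are hypothetical, and each recognition method is tried in order until one identifies exactly one object.

```python
# Hypothetical recognition orders mirroring the table above.
DEFAULT_ORDER = ["name", "label_or_text", "index", "id"]
CPP_ORDER = ["name", "label_or_text", "id", "index"]


def find_object(candidates, recorded, order):
    """Try each recognition method in order; return the object that the
    first usable method identifies uniquely, or None if none does."""
    for method in order:
        value = recorded.get(method)
        if value is None:
            continue  # this method was not recorded; try the next one
        matches = [c for c in candidates if c.get(method) == value]
        if len(matches) == 1:
            return matches[0]
    return None
```

For example, if a new build inserts a button so that indexes shift while IDs stay stable (the C++ case), the index-first order picks the wrong object and the ID-first order picks the right one:

```python
build2 = [{"index": 1, "id": 500}, {"index": 2, "id": 300}, {"index": 3, "id": 400}]
recorded = {"index": 2, "id": 400}
find_object(build2, recorded, DEFAULT_ORDER)  # {"index": 2, "id": 300}
find_object(build2, recorded, CPP_ORDER)      # {"index": 3, "id": 400}
```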


Note

Settings in the Object Recognition Order tab of the GUI Record Options dialog box do not affect HTML recording. When recording against HTML, Robot always uses HTMLID, if available, and then name, text, and index recognition and ignores any settings in the Object Recognition Order tab.

To change the object order preference:

- Open the GUI Record Options dialog box.

- Click the Object Recognition Order tab.

- Select a preference in the Object order preference list.

- Click OK or change other options.

Note

The object order preference is specific to each user. For example, you can record with C++ preferences while another user is recording with <Default> preferences.

Setting GUI Recording Options

To set the GUI recording options:

- Open the GUI Record Options dialog box by doing one of the following:

- Before you start recording, click Tools > GUI Record Options.

- Start recording by clicking the Record GUI Script button on the toolbar. In the Record GUI dialog box, click Options.

- Set the options on each tab.

- Click OK.

Naming Scripts Automatically

Robot can assist you in assigning names to scripts with its script autonaming feature. Autonaming inserts your specified characters into the Name box of a new script and appends a consecutive number to the prefix.

This is a useful feature if you are recording a series of related scripts and want to identify their relationship through the prefix in their names. For example, if you are testing the menus in a Visual Basic application, you might want to have every script name start with VBMenu.

- Open the GUI Record Options dialog box.

- In the General tab, type a prefix in the Prefix box. Clear the box if you do not want a prefix. If the box is cleared, you need to type a name each time you record a new script.

- Click OK or change other options.

The next time you record a new script, the prefix and a number appear in the Name box of the Record GUI dialog box.

For example, suppose the autonaming prefix is Test. When you record a new script, Test7 appears in the Name box because six other scripts already begin with Test.
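The numbering rule in that example can be sketched as a short Python function. This is only a model based on the example above (count the existing scripts whose names begin with the prefix, then add one); `next_script_name` is a hypothetical name, and Robot's actual rule may differ, for instance in how it handles gaps in the numbering.

```python
def next_script_name(prefix, existing_names):
    """Hypothetical autonaming model: prefix + (scripts using it + 1)."""
    count = sum(1 for name in existing_names if name.startswith(prefix))
    return f"{prefix}{count + 1}"
```

With scripts Test1 through Test6 already in the project, `next_script_name("Test", names)` returns `"Test7"`, and a fresh prefix such as `"VBMenu"` starts at `"VBMenu1"`.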

If you change the script autonaming prefix by clicking Options in the Record GUI dialog box, changing the prefix, and then clicking OK, the name in the Name box changes immediately.

Controlling How Robot Responds to Unknown Objects

During recording, Robot recognizes all standard Windows GUI objects that you click, such as check boxes and list boxes. Each of these objects is associated with one of a fixed list of object types. The association of an object with an object type is generally based on the class name of the window associated with the object.

Robot also recognizes many custom objects defined by IDEs that Robot supports, such as Visual Basic, Oracle Forms, Java, and HTML. For example, if you click a Visual Basic check box, Robot recognizes it as a standard Windows check box. This mapping is based on ThunderCheckBox, the class name that Visual Basic assigns to the object.

These built-in object mappings are delivered with Robot and are available to all users no matter which project they are using. During recording, you might click an object that Robot does not recognize. In this case, Robot’s behavior is controlled by a recording option that you set. You can have Robot do either of the following:

- Open the Define Object dialog box, so that you can map the object to a known object type.

Mapping an object to an object type permanently associates the class name of the object’s window with that object type, so that other objects of that type are recognized.

- Automatically map unknown objects encountered while recording with the Generic object type. This permanently associates the class name of the unknown object’s window with the Generic object type.

This is a useful setting if you are testing an application that was written in an IDE for which Robot does not have special support and which therefore might contain many unknown objects. When an object is mapped to the Generic object type, Robot can test a basic set of its properties, but it cannot test the special properties associated with a specific object type. Robot also records the object’s x,y coordinates instead of using the more reliable object recognition methods to identify the object.

These custom object mappings are stored in the project that was active when the mappings were created.

To control how Robot behaves when it encounters an unknown object during recording:

- Open the GUI Record Options dialog box.

- In the General tab, do one of the following:

- Select Define unknown objects as type "Generic" to have Robot automatically associate unknown objects encountered while recording with the Generic object type.

- Clear Define unknown objects as type "Generic" to have Robot suspend recording and open the Define Object dialog box if it encounters an unknown object during recording. Use this dialog box to associate the object with an object type.

- Click OK or change other options.
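The two behaviors can be modeled in a few lines of Python. This is a hypothetical sketch, not Robot's internals: class names map to object types, built-in mappings always win, and an unknown class name is either mapped to Generic automatically or handed to `ask_user`, a callback standing in for the Define Object dialog box. Either way the answer is stored, so the next object with that class name is recognized without asking again.

```python
# Hypothetical built-in mappings (class name -> object type).
BUILT_IN = {"ListBox": "ListBox", "ThunderCheckBox": "CheckBox"}


class ObjectMapper:
    def __init__(self, define_unknown_as_generic):
        self.custom = {}  # project-specific mappings, kept for later objects
        self.define_unknown_as_generic = define_unknown_as_generic

    def object_type(self, class_name, ask_user=None):
        if class_name in BUILT_IN:
            return BUILT_IN[class_name]
        if class_name not in self.custom:
            if self.define_unknown_as_generic:
                # Option selected: map unknown objects to Generic silently.
                self.custom[class_name] = "Generic"
            else:
                # Option cleared: ask_user stands in for Define Object.
                self.custom[class_name] = ask_user(class_name)
        return self.custom[class_name]
```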

Thursday, July 12, 2007

Enabling IDE Applications for Testing

To successfully test the objects in Oracle Forms, HTML, Java, C++, Delphi, and Visual Basic 4.0 applications, you need to enable the applications as follows before you start recording your scripts:

- Oracle Forms – Install the Rational Test Enabler for Oracle Forms. Run the Enabler to have it add the Rational Test Object Testing Library and three triggers to the .fmb files of the application.

- HTML – While recording or editing a script, use the Start Browser toolbar button to start Internet Explorer or Netscape Navigator from Robot. This loads the Rational ActiveX Test Control, which lets Robot recognize Web-based objects.

- Java – Run the Java Enabler to have it scan your hard drive for Java environments such as Web browsers and Sun JDK installations that Robot supports. The Java Enabler only enables those environments that are currently installed.

- C/C++ – To test the properties and data of ActiveX controls in your applications, install the Rational ActiveX Test Control. This is a small, nonintrusive custom control that acts as a gateway between Robot and your application. It has no impact on the behavior or performance of your application and is not visible at runtime. Manually add the ActiveX Test Control to each OLE container (Window) in your application.

- Visual Basic 4.0 – Install the Rational Test Enabler for Visual Basic. Attach the Enabler to Visual Basic as an add-in. Have the Enabler add the Rational ActiveX Test Control to every form in the application. This is a small, nonintrusive custom control that acts as a gateway between Robot and your application.

- Delphi – Install the Rational Object Testing Library for Delphi and the Rational Test Delphi Enabler. Run the Enabler, and then recompile your project to make it Delphi-testable.

You can install the Enablers and the ActiveX Test Control from the Rational Software Setup wizard.

Thursday, June 28, 2007

Recording GUI Scripts in Rational Robot

When you record a GUI script, Robot records:

- Your actions as you use the application-under-test. These user actions include keystrokes and mouse clicks that help you navigate through the application.

- Verification points that you insert to capture and save information about specific objects. A verification point is a point in a script that you create to confirm the state of an object across builds. During recording, the verification point captures object information and stores it as the baseline. During playback, the verification point recaptures the object information and compares it to the baseline.
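The baseline/recapture cycle of a verification point can be sketched in Python. This is an illustrative model only: an "object" is a dict of properties, `capture` stores the baseline during recording, and `verify` recaptures the same properties during playback and returns the differences as (baseline, actual) pairs. An empty result means the verification point passed.

```python
def capture(obj, properties):
    """Recording: snapshot the selected properties as the baseline."""
    return {p: obj.get(p) for p in properties}


def verify(baseline, obj):
    """Playback: recapture the same properties and report differences."""
    actual = capture(obj, baseline.keys())
    return {
        p: (baseline[p], actual[p])
        for p in baseline
        if baseline[p] != actual[p]
    }
```

Only the captured properties are compared, so a change in an unchecked property (say, a button's width) does not fail the verification point, while a changed caption does.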

The recorded GUI script establishes the baseline of expected behavior for the application-under-test. When new builds of the application become available, you can play back the script to test the builds against the established baseline in a fraction of the time that it would take to perform the testing manually.

The Recording Workflow

Typically, when you record a GUI script, your goal is to:

- Record actions that an actual user might perform (for example, clicking a menu command or selecting a check box).

- Create verification points to confirm the state of objects across builds of the application-under-test (for example, the properties of an object or the text in an entry field).

Before You Begin Recording

You should plan to use Robot at the earliest stages of the application development and testing process. If any Windows GUI objects such as menus and dialog boxes exist within the initial builds of your application, you can use Robot to record the corresponding verification points.

Consider the following guidelines before you begin recording:

- Establish predictable start and end states for your scripts.

- Set up your test environment.

- Create modular scripts.

- Create Shared Projects with UNC.

These guidelines are described in more detail in the following sections.

Establishing Predictable Start and End States for Scripts

By starting and ending the recording at a common point, scripts can be played back in any order, with no script being dependent on where another script ends. For example, you can start and end each script at the Windows desktop or at the main window of the application-under-test.

Setting Up Your Test Environment

Any windows that are open, active, or displayed when you begin recording should be open, active, or displayed when you stop recording. This applies to all applications, including Windows Explorer, e-mail, and so on.

Robot can record the sizes and positions of all open windows when you start recording, based on the recording options settings. During playback, Robot attempts to restore windows to their recorded states and inserts a warning in the log if it cannot find a recorded window.

In general, close any unnecessary applications before you start to record. For stress testing, however, you may want to deliberately increase the load on the test environment by having many applications open.

Creating Modular Scripts

Rather than defining a long sequence of actions in one GUI script, you should define scripts that are short and modular. Keep your scripts focused on a specific area of testing — for example, on one dialog box or on a related set of recurring actions.

When you need more comprehensive testing, modular scripts can easily be called from or copied into other scripts. They can also be grouped into shell scripts, which are top-level, ordered groups of scripts.

The benefits of modular scripts are:

- They can be called, copied, or combined into shell scripts.

- They can be easily modified or rerecorded if the developers make intentional changes to the application-under-test.

- They are easier to debug.
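The ideas of predictable start and end states, modular scripts, and shell scripts fit together as in the following Python sketch. The two "scripts" are hypothetical functions, not SQABasic: each one checks that it starts at the common state (the application's main window) and returns to it, so the shell script can run them in any order.

```python
HOME = "main window"  # hypothetical common start and end state


def check_about_dialog(state):
    if state["window"] != HOME:
        raise RuntimeError("script must start at the common state")
    state["log"].append("About dialog verified")
    state["window"] = HOME  # end where we started


def check_file_menu(state):
    if state["window"] != HOME:
        raise RuntimeError("script must start at the common state")
    state["log"].append("File menu verified")
    state["window"] = HOME


def run_shell_script(scripts):
    """A shell script: a top-level, ordered group of modular scripts."""
    state = {"window": HOME, "log": []}
    for script in scripts:
        script(state)
    return state["log"]
```

Because neither script depends on where the other ends, reordering the list passed to `run_shell_script` changes only the order of the log entries.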

Creating Shared Projects with UNC

When projects containing GUI or Manual scripts are to be shared, create the project in a shared directory using the Uniform Naming Convention (UNC). UNC paths are required for GUI test scripts and Manual test scripts that are run on Agent computers.

Tuesday, June 19, 2007

Hosting Robot/Applications on a Terminal Server

- Citrix MetaFrame (WIN2K)/Citrix MetaFrame client

- Microsoft Terminal Server (WIN2K)/Microsoft Terminal Server client

- Windows 2000 Server

- Windows Terminal Server (Windows NT4)

Starting Robot and Its Components

Before you start using Robot, you need to have:

- Rational Robot installed.

- A Rational project.

Logging On

To log on:

- From Start > Programs > Rational product name, start Rational Robot or one of its components to open the Rational Login dialog box.

Opening Other Rational Products and Components

Once you are logged onto a Robot component, you can start other products and components from either:

Some components also start automatically when you perform certain functions in another component.

Wednesday, June 13, 2007

Testing Applications with Rational TestFactory

TestFactory is integrated with Robot and its components to provide a full array of tools for team testing under Windows NT 4.0, Windows XP, Windows 2000, and Windows 98.

With TestFactory, you can:

- Automatically create and maintain a detailed map of the application-under-test.

- Automatically generate both scripts that provide extensive product coverage and scripts that encounter defects, without recording.

- Track executed and unexecuted source code and report its detailed findings.

- Shorten the product testing cycle by minimizing the time invested in writing navigation code.

- Play back Robot scripts in TestFactory to see extended code coverage information and to create regression suites; play back TestFactory scripts in Robot to debug them.

Managing Defects with Rational ClearQuest

Rational ClearQuest is a change-request management tool that tracks and manages defects and change requests throughout the development process. With ClearQuest, you can manage every type of change activity associated with software development, including enhancement requests, defect reports, and documentation modifications.

With Robot and ClearQuest, you can:

- Submit defects directly from the TestManager log or SiteCheck.

- Modify and track defects and change requests.

- Analyze project progress by running queries, charts, and reports.

Collecting Diagnostic Information During Playback

Use the Rational diagnostic tools to perform runtime error checking, profile application performance, and analyze code coverage during playback of a Robot script.

- Rational Purify is a comprehensive C/C++ runtime error checking tool that automatically pinpoints runtime errors and memory leaks in all components of an application, including third-party libraries, ensuring that code is reliable.

- Rational Quantify is an advanced performance profiler that provides application performance analysis, enabling developers to quickly find, prioritize, and eliminate performance bottlenecks within an application.

- Rational PureCoverage is a customizable code coverage analysis tool that provides detailed application analysis and ensures that all code has been exercised, preventing untested code from reaching the end user.

Performance Testing with Rational TestManager

Rational TestManager is a sophisticated tool for automating performance tests on client/server systems. A client/server system includes client applications accessing a database or application server, and browsers accessing a Web server.

Performance testing uses Rational Robot and Rational TestManager. Use Robot to record client/server conversations and store them in scripts. Use TestManager to schedule and play back the scripts. During playback, TestManager can emulate hundreds, even thousands, of users placing heavy loads and stress on your database and Web servers.

Doing performance testing with TestManager, you can:

- Find out if your system-under-test performs adequately.

- Monitor and analyze the response times that users actually experience under different usage scenarios.

- Test the capacity, performance, and stability of your server under real-world user loads.

- Discover your server’s break point and how to move beyond it.
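The core idea of emulating many users and measuring the response times they experience can be sketched in plain Python. This is not TestManager; `transaction` is a hypothetical stand-in for one recorded client/server request (here it just sleeps), and each thread plays one virtual user.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def transaction():
    """Hypothetical stand-in for one recorded client/server request."""
    time.sleep(0.01)  # simulated server latency


def virtual_user(iterations):
    """One emulated user: repeat the transaction and time each run."""
    timings = []
    for _ in range(iterations):
        start = time.perf_counter()
        transaction()
        timings.append(time.perf_counter() - start)
    return timings


def run_load(users, iterations):
    """Run concurrent virtual users and summarize response times."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        results = pool.map(virtual_user, [iterations] * users)
        timings = [t for user_timings in results for t in user_timings]
    return {
        "requests": len(timings),
        "mean_s": statistics.mean(timings),
        "max_s": max(timings),
    }
```

Raising `users` step by step while watching `mean_s` and `max_s` is a crude way to look for the break point the list above mentions.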

Managing Requirements with Rational RequisitePro

Rational RequisitePro is a requirements management tool that helps project teams control the development process. RequisitePro organizes your requirements by linking Microsoft Word to a requirements repository and providing traceability and change management throughout the project lifecycle.

Using RequisitePro, you can:

- Customize the requirements database and manage multiple requirement types.

- Prioritize, sort, and assign requirements.

- Control feature creep and ensure software quality.

- Track what changes have been made, by whom, when, and why.

- Integrate with other tools, including Rose, ClearCase, Rational Unified Process, and SoDA.

Using Robot with Other Rational Products

Planning and Managing Tests in TestManager

Rational TestManager is the one place to manage all testing activities – planning, design, implementation, execution, and analysis. TestManager ties testing with the rest of the development effort, joining your testing assets and tools to provide a single point from which to understand the exact state of your project.

- Plan Test. The activity of test planning is primarily answering the question, "What do I have to test?" When you complete your test planning, the result is a test plan that defines what to test. In TestManager, a test plan can contain test cases. The test cases can be organized in test case folders.

- Design Test. The activity of test designing is primarily answering the question, "How am I going to do a test?" When you complete your test designing, you end up with a test design that helps you understand how you are going to perform the test case. In TestManager, you can design your test cases by indicating the actual steps that need to occur in that test. You also specify the preconditions, postconditions, and acceptance criteria.

- Implement Test. The activity of implementing your tests is primarily creating reusable scripts. In TestManager, you can implement your tests by creating manual scripts. You can also implement automated tests by using Rational Robot. You can extend TestManager through APIs so that you can access your own implementation tools from TestManager. Because of this extensibility, you can implement your tests by building scripts in whatever tools are appropriate in your situation and organization.

- Execute Tests. The activity of executing your tests is primarily running your scripts to make sure that the system functions correctly. In TestManager, you can run any of the following: (1) an individual script, which runs a single implementation; (2) one or more test cases, which run the implementations of the test cases; (3) a suite, which runs test cases and their implementations across multiple computers and users.

- Evaluate Tests. The activity of evaluating tests is determining the quality of the system-under-test. In TestManager, you can evaluate tests by examining the results of test execution in the test log, and by running various reports.

Planning and managing tests is only one part of Rational TestManager. You also use TestManager to view the logs created by Robot.

Managing Intranet and Web Sites with SiteCheck and Robot

- Visualize the structure of your Web site and display the relationship between each page and the rest of the site.

- Identify and analyze Web pages with active content, such as forms, Java, JavaScript, ActiveX, and Visual Basic Script (VBScript).

- Filter information so that you can inspect specific file types and defects, including broken links.

- Examine and edit the source code for any Web page, with color-coded text.

- Update and repair files using the integrated editor, or configure your favorite HTML editor to perform modifications to HTML files.

- Perform comprehensive testing of secure Web sites. SiteCheck provides Secure Socket Layer (SSL) support, proxy server configuration, and support for multiple password realms.

Robot has two verification points for use with Web sites:

- Use the Web Site Scan verification point to check the content of your Web site with every revision and ensure that changes have not resulted in defects.

- Use the Web Site Compare verification point to capture a baseline of your Web site and compare it to the Web site at another point in time.

The following figures show the types of defects you can scan for using a Web Site verification point and the list of defects displayed in SiteCheck.

Monday, June 11, 2007

Creating Datapools

If a script sends data to a server during playback, consider using a datapool as the source of the data. By accessing a datapool, a script transaction that is executed multiple times during playback can send realistic data and even unique data to the server each time. If you do not use a datapool, the same data (the exact data you recorded) is sent each time the transaction is executed.

TestManager is shipped with many commonly used data types, such as cities, states, names, and telephone area codes. In addition, TestManager lets you create your own data types.

When creating a datapool, you specify the kinds of data (called data types) that the script will send — for example, customer names, addresses, and unique order numbers or product names. When you finish defining the datapool, TestManager automatically generates the number of rows of data that you specify.
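A datapool can be pictured as a table whose columns are data types and whose rows are generated on demand. The Python sketch below is a hypothetical model, not TestManager's generator: `DATA_TYPES` maps each column to a small generator function, and `order_number` is built from the row number so that every row gets a unique value.

```python
import random

# Hypothetical data types (TestManager ships its own, e.g. cities and names).
DATA_TYPES = {
    "city": lambda rng, i: rng.choice(["Boston", "Denver", "Austin"]),
    "customer": lambda rng, i: rng.choice(["Ana", "Raj", "Lee"]),
    "order_number": lambda rng, i: f"ORD{i:06d}",  # unique per row
}


def generate_datapool(columns, rows, seed=0):
    """Generate `rows` records with one value per requested column."""
    rng = random.Random(seed)  # seeded so the pool is reproducible
    return [
        {col: DATA_TYPES[col](rng, i) for col in columns}
        for i in range(1, rows + 1)
    ]
```

During playback, each iteration of a transaction would read the next row, so the server receives different (and, for order numbers, unique) data each time instead of the exact recorded values.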

Analyzing Results in the Log and Comparators

You use TestManager to view the logs that are created when you run scripts and schedules.

Use the log to:

View the results of running a script, including verification point failures, procedural failures, aborts, and any additional playback information. Reviewing the results in the log reveals whether each script and verification point passed or failed.

Use the Comparators to:

Analyze the results of verification points to determine why a script may have failed. Robot includes four Comparators:

- Object Properties Comparator

- Text Comparator

- Grid Comparator

- Image Comparator

When you select the line that contains the failed Object Properties verification point and click View > Verification Point, the Object Properties Comparator opens, as shown in the following figure. In the Comparator, the Baseline column shows the original recording, and the Actual column shows the playback that failed. Compare the two files to determine whether the difference is an intentional change in the application or a defect.

Wednesday, June 6, 2007

Developing Tests in Robot

Use Robot to:

- Perform full functional testing. Record and play back scripts that navigate through your application and test the state of objects through verification points.

- Perform full performance testing. Use Rational Robot and Rational TestManager together to record and play back sessions that help you determine whether a multiclient system is performing within user-defined standards under varying loads.

- Create and edit scripts using the SQABasic and VU scripting environments. The Robot editor provides color-coded commands with keyword Help for powerful integrated programming during script development. (VU scripting is used with sessions in performance testing.)

- Test applications developed with IDEs such as Java, HTML, Visual Basic, Oracle Forms, Delphi, and PowerBuilder. You can test objects even if they are not visible in the application’s interface.

- Collect diagnostic information about an application during script playback. Robot is integrated with Rational Purify, Rational Quantify, and Rational PureCoverage. You can play back scripts under a diagnostic tool and see the results in the log.

The Object-Oriented Recording technology in Robot lets you generate scripts by simply running and using the application-under-test. Robot uses Object-Oriented Recording to identify objects by their internal object names, not by screen coordinates. If objects change locations or their text changes, Robot still finds them on playback.

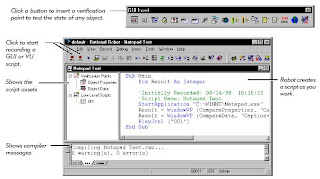

The following figure shows the main Robot window after you have recorded a script.

The Object Testing technology in Robot lets you test any object in the application-under-test, including the object’s properties and data. You can test standard Windows objects and IDE-specific objects, whether they are visible in the interface or hidden.

Introduction to Rational Robot

Rational Robot is a complete set of components for automating the testing of Microsoft Windows client/server and Internet applications running under Windows NT 4.0, Windows XP, Windows 2000, and Windows 98.

- Rational Administrator – Use to create and manage Rational projects, which store your testing information.

- Rational TestManager Log – Use to review and analyze test results.

- Object Properties, Text, Grid, and Image Comparators – Use to view and analyze the results of verification point playback.

- Rational SiteCheck – Use to manage Internet and intranet Web sites.

Managing Rational Projects with the Administrator

You use the Rational Administrator to create and manage projects.

Rational projects store application testing information, such as scripts, verification points, queries, and defects. Each project consists of a database and several directories of files. All Rational Test components on your computer update and retrieve data from the same active project.

Projects help you organize your testing information and resources for easy tracking. Projects are created in the Rational Administrator, usually by someone with administrator privileges.

Use the Administrator to:

- Create a project under configuration management.

- Create a project outside of configuration management.

- Connect to a project.

- See projects that are not on your machine (register a project).

- Delete a project.

- Create and manage users and groups for a Rational Test datastore.

- Create and manage projects containing Rational RequisitePro projects and Rational Rose models.

- Manage security privileges for the entire Rational project.

- Create a test datastore using SQL Anywhere.

- Convert an existing Microsoft Access test datastore to a SQL Anywhere test datastore.

The following figure shows the main Rational Administrator window after you have created some projects:

Summary

When you create a test datastore, the Rational Administrator uses Microsoft Access for the default database engine. However, if more than one user will access the test datastore simultaneously, use Sybase SQL Anywhere for the database engine. To create a test datastore using SQL Anywhere software, click Advanced Database Setup in the Create Test Datastore dialog box. See the Rational Administrator online Help for more information.

You must install Sybase SQL Anywhere software and create a SQL Anywhere database server before you create a new SQL Anywhere test datastore or convert an existing Microsoft Access test datastore to a SQL Anywhere test datastore.

Wednesday, May 9, 2007

SilkTest and WinRunner Feature Descriptions Continued...

Both tools provide proprietary, interpreted scripting languages. Each language provides the usual flow control constructs, arithmetic and logical operators, and a variety of built-in library functions to perform such activities as string manipulation, [limited] regular expression support, standard input and output, etc. But that is where the similarity ends:

• SilkTest provides a strongly typed, object-oriented programming language called 4Test. Variables and constants may be one of 19 built-in data types, along with a user-defined record data type. 4Test supports single- and multi-dimensional dynamic arrays and lists, which can be initialized statically or dynamically. Exception handling is built into the language [via the do… except statement].

• WinRunner provides a non-typed, C-like procedural programming language called TSL. Variables and constants are either numbers or strings [conversion between these two types occurs dynamically, depending on usage]. There is no user-defined data type such as a record or a data structure. TSL supports sparsely populated associative single- and [pseudo] multi-dimensional arrays, which can be initialized statically or dynamically. Element access is always done using string references: foobar[“1”] and foobar[1] access the same element [as the second access is implicitly converted to an associative string index reference]. Exception handling is not built into the language.

The only way to truly understand and appreciate the differences between these two programming environments is to use and experiment with both of them.
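To make the TSL indexing rule concrete, here is a minimal Python sketch (not TSL, and not how WinRunner implements arrays internally) of a sparse associative array that converts every index to a string before storing or looking it up. The class name `TslArray` is hypothetical.

```python
class TslArray:
    """Sparse associative array whose indexes are always strings, mimicking TSL."""

    def __init__(self):
        self._items = {}  # only elements that were assigned are stored (sparse)

    def __setitem__(self, index, value):
        # Every index, numeric or not, is converted to its string form.
        self._items[str(index)] = value

    def __getitem__(self, index):
        return self._items[str(index)]

    def __len__(self):
        return len(self._items)


foobar = TslArray()
foobar["1"] = "first"
foobar[100] = "hundredth"   # no elements 2..99 are created
print(foobar[1])            # numeric 1 becomes "1", so this prints: first
print(len(foobar))          # prints: 2
```

Because both accesses collapse to the same string key, code that mixes numeric and string indexes still addresses a single element, which is the behaviour the paragraph above describes.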

Exception Handling

Both tools provide the ability to handle unexpected events and errors, referred to as exceptions, but implement this feature differently:

• SilkTest’s exception handling is built into the 4Test language. Using the do… except construct, you can handle an exception locally instead of [implicitly] relying on SilkTest’s default built-in exception handler [which terminates the currently running test and logs an error]. If an exception is raised within the do block of the statement, control is immediately passed to the except block. A variety of built-in functions [LogError(), LogWarning(), ExceptNum(), ExceptLog(), etc.] and 4Test statements [raise, reraise, etc.] aid in processing trapped exceptions within the except block.

• WinRunner’s exception handling is built around (1) defining an exception based on the type of exception (Popup, TSL, or object) and relevant characteristics of the exception (most often its error number); (2) writing an exception handler; and (3) enabling and disabling that exception handler at appropriate point(s) in the code. These tasks can be achieved by hand coding or through the use of the Exception Handling visual recorder.
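The two models can be contrasted in a short Python sketch. This is Python, not 4Test or TSL: the names `WindowNotFound`, `define_exception`, `set_enabled`, `report_event`, and the error number 1001 are hypothetical and are not built-ins of either tool. The first model handles the failure right where it happens; the second registers a handler by exception kind and number and switches it on or off.

```python
# Model 1 (SilkTest-like): the handler sits right around the code that can fail.
class WindowNotFound(Exception):
    """Hypothetical exception raised when an expected window is missing."""

def click_ok(window_open):
    if not window_open:
        raise WindowNotFound("OK dialog did not appear")
    return "clicked"

def run_with_local_handler():
    try:                                  # plays the role of 4Test's "do" block
        return click_ok(window_open=False)
    except WindowNotFound as exc:         # plays the role of the "except" block
        return f"logged error: {exc}"

# Model 2 (WinRunner-like): define an exception by kind and error number,
# attach a handler, then enable or disable it at chosen points in the script.
handlers = {}  # (kind, error_number) -> [handler, enabled]

def define_exception(kind, error_number, handler):
    handlers[(kind, error_number)] = [handler, False]   # defined but switched off

def set_enabled(kind, error_number, enabled):
    handlers[(kind, error_number)][1] = enabled

def report_event(kind, error_number):
    """Called when an event is detected; runs the handler only if it is enabled."""
    entry = handlers.get((kind, error_number))
    if entry is not None and entry[1]:
        return entry[0](error_number)
    return "unhandled"

define_exception("popup", 1001, lambda num: f"dismissed popup {num}")
print(run_with_local_handler())     # logged error: OK dialog did not appear
print(report_event("popup", 1001))  # unhandled (handler is still disabled)
set_enabled("popup", 1001, True)
print(report_event("popup", 1001))  # dismissed popup 1001
```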

Test Results Analysis

• SilkTest’s test results file revolves around the test run. For example, if you run 3 testcases [via a test suite or SilkOrganizer], all of the information for that test run is stored in a single results file. There is a viewer to analyze the results of the last test run or of one of the X runs before it. Errors captured in the results file contain a full stack trace to the failing line of code, which can be brought up in the editor by double-clicking on any line in that stack trace.

• WinRunner’s test results file revolves around each testcase. For example, if you run 3 testcases [by hand or via a batch test or TestDirector], 3 test results files are created, each in a subdirectory under its associated testcase. There is a viewer to analyze the results of a test’s last run or, if results have not been deleted, a previous run. Double-clicking on events in the log often expands that entry’s information, sometimes bringing up specialized viewers [for example, when that event is some type of checkpoint or some type of failure].

Managing the Testing Process

• SilkTest has a built-in facility, SilkOrganizer, for creating a testplan and then linking the testplan to testcases. SilkOrganizer can also be used to track the automation process and control the execution of selected groups of testcases. One or more user-defined attributes [such as “Test Category”, “Author”, “Module”, etc.] are assigned to each testcase and then later used in testplan queries to select a group of tests for execution. There is also a modest capability to add manual test placeholders in the testplan, and then manually add pass/fail status to the results of a full test run. SilkTest also supports a test suite, which is a file containing calls to one or more test scripts or other test suites.

• WinRunner integrates with a separate program called TestDirector [at a substantial additional cost] for visually creating a test project and then linking WinRunner testcases into that project. TestDirector is a database-repository-based application that provides a variety of tools to analyze and manipulate the various database tables and test results stored in the repository for the project. A bug reporting and tracking tool is included with TestDirector as well [and this bug tracking tool supports a browser-based client]. Using a visual recorder, testcases are added to one or more test sets [such as “Test Category”, “Author”, “Module”, etc.] for execution as a group. There is a robust capability for authoring manual test cases [i.e. describing each test step and its expected results], interactively executing each manual test, and then saving the pass/fail status for each test step in the repository. TestDirector also allows test sets to be scheduled for execution at a future time and date, as well as executing tests remotely on other machines [this last capability requires the Enterprise version of TestDirector]. TestDirector is also capable of interfacing with and executing LoadRunner test scripts as well as other 3rd-party test scripts [but this latter capability requires custom programming via TestDirector APIs]. Additionally, TestDirector provides APIs to allow WinRunner as well as other 3rd-party test tools [and programming environments] to interface with a TestDirector database.
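Selecting a group of tests by user-defined attributes, as described for SilkOrganizer testplan queries, is essentially a filter over the test inventory. The Python sketch below shows the idea; the `PlannedTest` class, the attribute names, and the `select` helper are hypothetical and do not reproduce either product's query syntax.

```python
from dataclasses import dataclass, field

@dataclass
class PlannedTest:
    name: str
    attributes: dict = field(default_factory=dict)  # user-defined attributes

def select(tests, **criteria):
    """Return the tests whose attributes match every criterion given."""
    return [t for t in tests
            if all(t.attributes.get(key) == value for key, value in criteria.items())]

plan = [
    PlannedTest("login_valid", {"Category": "Smoke", "Module": "Login", "Author": "ann"}),
    PlannedTest("login_locked", {"Category": "Regression", "Module": "Login", "Author": "bob"}),
    PlannedTest("report_export", {"Category": "Smoke", "Module": "Reports", "Author": "ann"}),
]

for test in select(plan, Category="Smoke"):
    print(test.name)   # login_valid, then report_export
```

Combining criteria (for example `Module="Login", Author="bob"`) narrows the group further, which mirrors how several attributes can be used together in one query.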

External Files

When the tools’ source code files are managed with a code control tool such as PVCS or Visual SourceSafe, it is useful to understand what external side files are created:

• SilkTest implicitly creates *.*o bytecode-like executables after interpreting the source code contained in testcases and include files [but it is unlikely that most people will want to place these files under source code control]. No side files are created in the course of using its recorders. SilkTest does, though, create explicit *.bmp files for storing the expected and actual captured bitmap images when performing a bitmap verification.

• WinRunner implicitly creates many side files using a variety of extensions [*.eve, *.hdr, *.asc, *.ckl, *.chk, and a few others] in a variety of implicitly created subdirectories [/db, /exp, /chklist, /resX] under the testcase, in the course of using its visual recorders as well as when storing pass/fail results at runtime.

Debugging

Both tools support a visual debugger with the typical capabilities of breakpoints, single step, run to, step into, step out of, etc.

SilkTest And WinRunner Feature Description Continued....

• SilkTest supports a true object-oriented hierarchy of parent-child-grandchild-etc. relationships between windows and objects within windows. In this model an object such as a menu is the child of its enclosing window and a parent to its menu item objects.

• WinRunner, with some rare exceptions [often nested tables on web pages], has a flat object hierarchy where child objects exist in parent windows. Note that on web pages constructed using frames, the frames are treated as windows, not as child objects of the enclosing window.
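The difference between the two object models can be pictured with plain Python data structures. This is only an illustration under simplifying assumptions: the object names are hypothetical, and neither tool stores its declarations or GUI Map in this form. In the hierarchical model a menu item is reached through its menu, which is reached through its window; in the flat model a window simply lists the objects it contains.

```python
# Hierarchical model (SilkTest-like): objects nest under their parents,
# e.g. window -> menu -> menu item.
hierarchy = {
    "MainWindow": {
        "FileMenu": {"Open": {}, "Exit": {}},
        "OKButton": {},
    }
}

def find(tree, path):
    """Walk a dotted path such as 'MainWindow.FileMenu.Open' one level at a time."""
    node = tree
    for part in path.split("."):
        node = node[part]   # raises KeyError if any level is missing
    return node

# Flat model (WinRunner-like): each window simply lists the objects it contains.
flat = {
    "MainWindow": {"FileMenu", "Open", "Exit", "OKButton"},
}

print(find(hierarchy, "MainWindow.FileMenu.Open"))   # {} (a leaf object)
print("Open" in flat["MainWindow"])                  # True
```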

Object Recognition

Both of these tools use a lookup table mechanism to isolate the variable name used to reference an object in a test script from the description used by the operating system to access that object at runtime:

• SilkTest normally places an application’s GUI declarations in a test frame file. There is generally one GUI declaration for each window and each object in a window. A GUI declaration consists of an object identifier (the variable used in a test script) and its class and object tag definition, used by the operating system to access that object at runtime. SilkTest provides the following capabilities to define an object tag:

(1) a string, which can include wildcards;

(2) an array reference which resolves to a string, which can include wildcards;

(3) a function or method call that returns a string, which can include wildcards;

(4) an object class and class-relative index number; and

(5) multiple tags [multi-tags], each optionally conditioned with (6) an OS/GUI/browser specifier [a qualification label].

• WinRunner normally places an application’s logical name/physical descriptor definitions in a GUI Map file. There is generally one logical name/physical descriptor definition for each window and each object in a window. The logical name is used to reference the object in a test script, while the physical descriptor is used by the operating system to access that object at runtime. WinRunner provides the following capability to define a physical descriptor:

(1) a variable number of comma-delimited strings which can include wildcards, where each string identifies one property of the object. [While there is only a single method of defining a physical descriptor, this definition can include a wide range and variable number of obligatory, optional, and selector properties on an object-by-object basis.]

The notion behind this lookup table mechanism is to permit changes to an object tag [SilkTest] or a physical descriptor [WinRunner] definition without the need to change the associated identifier [SilkTest] or logical name [WinRunner] used in the testcase. In general, the object tag [SilkTest] or physical descriptor [WinRunner] resolves to one or more property definitions which uniquely identify the object in the context of its enclosing parent window at runtime. It is also possible with both tools to dynamically construct and use object tags [SilkTest] or physical descriptors [WinRunner] at runtime to reference objects in test scripts.
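The lookup-table idea can be sketched in a few lines of Python: scripts refer only to a logical name, and a separate table maps that name to property patterns that are matched against live objects at runtime. The property names (`class`, `label`, `attached_text`), the sample objects, and the `resolve` helper are assumptions for illustration; this is not the GUI Map or test frame file format, and the wildcards here use Python's shell-style `fnmatch` patterns rather than either tool's syntax.

```python
from fnmatch import fnmatchcase

# Lookup table: logical name used in scripts -> physical description (property patterns).
gui_map = {
    "Login":    {"class": "window", "label": "Login*"},
    "OK":       {"class": "push_button", "label": "OK"},
    "UserName": {"class": "edit", "attached_text": "User*"},
}

# Objects the application exposes at runtime (normally reported by the GUI layer).
runtime_objects = [
    {"class": "window", "label": "Login - Build 42"},
    {"class": "push_button", "label": "OK"},
    {"class": "edit", "attached_text": "User name:"},
]

def resolve(logical_name):
    """Return the first runtime object whose properties match every pattern."""
    description = gui_map[logical_name]
    for obj in runtime_objects:
        if all(key in obj and fnmatchcase(obj[key], pattern)
               for key, pattern in description.items()):
            return obj
    raise LookupError(f"{logical_name!r} not found")

print(resolve("Login")["label"])   # Login - Build 42
```

If the window title changes in a later build, only the pattern in `gui_map` needs editing; every script that says `resolve("Login")` stays the same.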

Object Verification

Both tools provide a variety of built-in library functions permitting a user to hand code simple verification of a single object property [i.e. is/is not focused, is/is not enabled, has/does not have an expected text value, etc.]. Verification of multiple properties in a single object, and of multiple objects, is supported using visual recorders:

• SilkTest provides a Verify Window recorder which allows any combination of objects and object properties in the currently displayed window to be selected and captured. Using this tool results in the creation, within the testcase, of a VerifyProperties() method call against the captured window.

• WinRunner provides several GUI Checkpoint recorders to validate (1) a single object property, (2) multiple properties in a single object, and (3) multiple properties of multiple objects in a window. The single property recorder places a verification statement directly in the test code, while the multiple property recorders create unique checklist [*.ckl] files in the /chklists subdirectory [which describe the objects and properties to capture], as well as an associated expected results [*.chk] file in the /exp subdirectory [which contains the expected value of each object and property defined in the checklist file].

Both tools offer advanced features to define new verification properties [using mapping techniques and/or built-in functions and/or external DLL functions] and/or to customize how existing properties are captured for standard objects.
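A multi-object, multi-property verification boils down to comparing captured property values against a stored set of expected values. The Python sketch below models that comparison; the dictionaries loosely play the roles of a checklist plus expected results, but the `verify_properties` helper and the property names are hypothetical and do not reflect the actual *.ckl/*.chk formats.

```python
def verify_properties(actual, expected):
    """Compare captured properties with expected values; return a list of mismatches."""
    mismatches = []
    for obj_name, props in expected.items():
        for prop, want in props.items():
            got = actual.get(obj_name, {}).get(prop)   # None if object/property missing
            if got != want:
                mismatches.append(f"{obj_name}.{prop}: expected {want!r}, got {got!r}")
    return mismatches

# Objects and properties to check, with their expected values.
expected = {
    "OK":       {"enabled": True, "label": "OK"},
    "UserName": {"focused": True},
}
# Values captured from the application at runtime.
captured = {
    "OK":       {"enabled": False, "label": "OK"},
    "UserName": {"focused": True},
}

for line in verify_properties(captured, expected):
    print(line)   # OK.enabled: expected True, got False
```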

Custom Objects

Note: The description of this feature, more than any other in this report, is limited in its scope and coverage. An entire white paper could be dedicated to exploring and describing how each of these tools deals with custom objects. Therefore, dedicate several days to evaluating how each of these tools accommodates custom objects in your specific applications.

To deal with a custom object [i.e. an object that does not map to a standard class], both tools support the use of class mapping [i.e. mapping a custom class to a standard class with like functionality], along with a variety of X:Y pixel coordinate clicking techniques [some screen absolute, some object relative] to deal with bitmap objects, as well as the ability to use external DLL functions to assist in object identification and verification. Beyond these shared capabilities, each tool has the following unique custom object capabilities:

• SilkTest has a feature to overlay a logical grid of X rows by Y columns on a graphic that has evenly spaced “hot spots” [this grid definition is then used to define logical GUI declarations for each hot spot]. These X:Y row/column coordinates are resolution independent [i.e. the logical reference says “give me the thing in the 4th column of the 2nd row”, and the grid expands or contracts depending on screen resolution].

• WinRunner has a built-in text recognition engine which works with most standard fonts. This capability can often be used to extract visible text from custom objects, position the cursor over a custom object, etc. This engine can also be taught non-standard font types which it does not understand out of the box.

Both tools offer support for testing non-graphical controls through the advanced use of custom DLLs [developed by the user], or the Extension Kit [SilkTest, which may have to be purchased at an additional cost] and the Mercury API Function Library [WinRunner].
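The resolution independence of a logical grid comes from simple arithmetic: for a graphic with bounds (left, top, width, height) divided into rows × cols cells, the center of cell (row, col) is at x = left + (col − 0.5) · width / cols and y = top + (row − 0.5) · height / rows. The Python sketch below applies that formula; the function name and the bounds convention are assumptions, not SilkTest's API.

```python
def hot_spot_center(bounds, rows, cols, row, col):
    """Pixel center of the 1-based (row, col) cell of a rows x cols grid.

    bounds = (left, top, width, height) of the graphic in screen pixels.
    """
    left, top, width, height = bounds
    if not (1 <= row <= rows and 1 <= col <= cols):
        raise ValueError("cell outside grid")
    x = left + (col - 0.5) * width / cols
    y = top + (row - 0.5) * height / rows
    return round(x), round(y)

# "The thing in the 4th column of the 2nd row" of a 2 x 5 graphic, shown at two
# different sizes: the logical reference stays the same, the pixels change.
print(hot_spot_center((0, 0, 500, 100), rows=2, cols=5, row=2, col=4))   # (350, 75)
print(hot_spot_center((0, 0, 1000, 200), rows=2, cols=5, row=2, col=4))  # (700, 150)
```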

Internationalization (Language Localization)

• SilkTest supports the single-byte IBM extended ASCII character set, and its Technical Support has also indicated “that Segue has no commitment for unicode”. The user guide chapter titled “Supporting Internationalized Applications” shows a straightforward technique for supporting X number of [single-byte IBM extended ASCII character set] languages in a single test frame of GUI declarations.

• WinRunner provides no documentation on how to use the product to test language-localized applications. Technical Support has indicated that (1) “WinRunner supports multi-byte character sets for language localized testing…”, (2) “there is currently no commitment for the unicode character set…”, and (3) “it is possible to convert a US English GUI Map to another language using a [user developed] phrase dictionary and various gui_* built-in functions”.

Check into the aspects of this feature very carefully if it is important to your testing effort.

Database Interfaces

Both tools provide a variety of built-in functions to perform Structured Query Language (SQL) queries to control, alter, and extract information from any database which supports the Open Database Connectivity (ODBC) interface.

Database Verification

Both tools provide a variety of built-in functions to make SQL queries to extract information from an ODBC-compliant database and save it to a variable [or, if you wish, an external file]. Verification at this level is done with hand coding.

WinRunner also provides a visual recorder to create a Database Checkpoint used to validate the selected contents of an ODBC-compliant database within a testcase. This recorder creates side files similar to GUI Checkpoints and has a built-in interface to (1) the Microsoft Query application [which can be installed as part of Microsoft Office] and (2) the Data Junction application [which may have to be purchased at an additional cost], to assist in constructing and saving complex database queries.
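A hand-coded database verification amounts to running a query and comparing the result set with expected rows. To keep the sketch self-contained, it uses Python's built-in `sqlite3` module as a stand-in for an ODBC data source; the `orders` table, its data, and the `db_check` helper are hypothetical.

```python
import sqlite3

# In-memory database standing in for the application's ODBC data source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany("INSERT INTO orders (customer, total) VALUES (?, ?)",
                 [("acme", 120.0), ("acme", 80.0), ("globex", 45.5)])

def db_check(connection, query, params, expected_rows):
    """Run a query and compare its result set with the expected rows."""
    actual = connection.execute(query, params).fetchall()
    return actual == expected_rows, actual

ok, rows = db_check(conn,
                    "SELECT customer, SUM(total) FROM orders WHERE customer = ? GROUP BY customer",
                    ("acme",),
                    [("acme", 200.0)])
print(ok, rows)   # True [('acme', 200.0)]
```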

Data Driven Testing

Both tools support the notion of data-driven tests, but implement this feature differently:

• SilkTest’s implementation is built around an array of user-defined records. A record is a data structure defining an arbitrary number of data items which are populated with values when the array is initialized [statically or at runtime]. Non-programmers can think of an array of records as a memory-resident spreadsheet of X rows, each containing Y columns, where each row/column intersection contains a data item. The test code, as well as the array itself, must be hand coded. It is also possible to populate the array each time the test is run by extracting the array’s data values from an ODBC-compliant database, using a series of built-in SQL function calls. The test then iterates through the array such that each iteration of the test uses the data items from the next record in the array to drive the test or provide expected data values.

• WinRunner’s implementation is built around an Excel-compatible spreadsheet file of X rows, each containing Y columns, where each row/column intersection contains a data item. This spreadsheet is referred to as a Data Table. The test code, as well as the Data Table itself, can be created with hand coding or the use of the DataDriver visual recorder. It is also possible to populate a Data Table file each time the test is run by extracting the table’s data values from an ODBC-compliant database using a WinRunner wizard interfaced to the Microsoft Query application. The test then iterates through the Data Table such that each iteration of the test uses the data items from the next row in the table to drive the test or provide expected data values.

Both tools also support the capability to pass data values into a testcase for a more modest approach to data driving a test.
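Both implementations share one loop: read a table of records, then run the same test body once per record, using some fields as inputs and others as expected values. The Python sketch below reads CSV text (standing in for an exported spreadsheet or a hard-coded array of records); the `LoginCase` record and the `login` function are hypothetical stand-ins for the application under test.

```python
import csv
import io
from dataclasses import dataclass

@dataclass
class LoginCase:          # one "record" (SilkTest) or one row (WinRunner Data Table)
    user: str
    password: str
    expected: str

def login(user, password):
    """Hypothetical stand-in for driving the application under test."""
    return "welcome" if (user, password) == ("ann", "secret") else "denied"

# CSV text standing in for a spreadsheet file or a statically initialized array.
data_table = io.StringIO(
    "user,password,expected\n"
    "ann,secret,welcome\n"
    "ann,wrong,denied\n"
    "bob,secret,denied\n"
)
cases = [LoginCase(**row) for row in csv.DictReader(data_table)]

results = []
for case in cases:        # each iteration uses the next record/row
    actual = login(case.user, case.password)
    results.append((case.user, case.password, actual == case.expected))

print(results)
```

Adding a test scenario then means adding a row of data rather than writing more test code.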

Restoring an Application’s Initial State

• SilkTest provides a built-in recovery system which restores the application to a stable state, referred to as the basestate, when the test or application under test fails ungracefully. The default basestate is defined to be: (1) the application under test is running; (2) the application is not minimized; and (3) only the application’s main window is open and active. There are many built-in functions and features which allow the test engineer to modify, extend, and customize the recovery system to meet the needs of each application under test.

• WinRunner does not provide a built-in recovery system. You need to code routines to return the application under test to its basestate, and dismiss all orphaned dialogs, when a test fails ungracefully.
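A hand-coded recovery routine of the kind WinRunner users must write can follow the three-part default basestate above. The Python sketch below wraps each test so the application is restored before and after it runs, whether or not the test fails; `FakeApp`, `restore_basestate`, and `run_with_recovery` are hypothetical names, and the application is only simulated.

```python
class FakeApp:
    """Hypothetical application model used only for this sketch."""
    def __init__(self):
        self.running = False
        self.minimized = False
        self.open_windows = []

    def start(self):
        self.running = True
        self.open_windows = ["Main"]

def restore_basestate(app):
    """Bring the app back to the default basestate described above."""
    if not app.running:
        app.start()                  # (1) the application is running
    app.minimized = False            # (2) it is not minimized
    app.open_windows = ["Main"]      # (3) only the main window is open (orphaned dialogs dismissed)

def run_with_recovery(app, testcase):
    restore_basestate(app)           # start each test from a known state
    try:
        testcase(app)
        return "passed"
    except Exception as exc:         # the test failed ungracefully
        return f"failed: {exc}"
    finally:
        restore_basestate(app)       # leave a clean state for the next test

def broken_test(app):
    app.open_windows.append("Save As")   # a dialog is left open...
    raise RuntimeError("Save button not found")

app = FakeApp()
print(run_with_recovery(app, broken_test))  # failed: Save button not found
print(app.open_windows)                     # ['Main']
```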

Tuesday, May 8, 2007

SilkTest and WinRunner Feature Descriptions

For the sake of consistency, alphabetical ordering was selected: in each of the following sections, SilkTest features are described first, followed by WinRunner features.

Startup Initialization and Configuration

• SilkTest derives its initial startup configuration settings from its partner.ini file. This is not critical, though, because SilkTest can be reconfigured at any point in the session by either changing any setting in the Options menu or loading an Option Set. An Option Set file (*.opt) permits customized configuration settings to be established for each test project. The project-specific Option Set is then loaded [either interactively, or under program control] prior to the execution of the project’s testcases. The Options menu or an Option Set can also be used to load an include file (*.inc) containing the project’s GUI Declarations, along with any number of other include files containing library functions, methods, and variables shared by all testcases.

• WinRunner derives its initial startup configuration from a wrun.ini file of settings. During startup the user is normally polled [this can be disabled] for the type of addins they want to use during the session. The default wrun.ini file is used when starting WinRunner directly, while project-specific initializations can be established by creating desktop shortcuts which reference a project-specific wrun.ini file. The use of customized wrun.ini files is important because once WinRunner is started with a selected set of addins, you must terminate WinRunner and restart it to use a different set of addins. The startup implementation supports the notion of a startup test which can be executed during WinRunner initialization. This allows project-specific compiled modules [memory-resident libraries] and GUI Maps to be loaded. The functions and variables contained in these modules can then be used by all tests that are run during that WinRunner session.

Both tools allow most of the configuration setup established in these files to be overridden with runtime code in library functions or the test scripts.
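The layering described in this section (tool-wide defaults, then a project-specific settings file, then overrides from runtime code) can be sketched with Python's `configparser`, where values read later replace earlier ones. The section and key names below (`startup`, `addins`, `gui_map`, `timeout`) are hypothetical and are not the actual contents of partner.ini or wrun.ini.

```python
import configparser

DEFAULTS = """
[startup]
addins = web
gui_map = default.gui
timeout = 10
"""

PROJECT_OVERRIDES = """
[startup]
addins = web,java
gui_map = billing.gui
"""

config = configparser.ConfigParser()
config.read_string(DEFAULTS)            # tool-wide defaults
config.read_string(PROJECT_OVERRIDES)   # project-specific file; later values win

# Runtime code in a library function or script can still override a setting.
config["startup"]["timeout"] = "30"

print(config["startup"]["addins"])    # web,java
print(config["startup"]["gui_map"])   # billing.gui
print(config["startup"]["timeout"])   # 30
```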

Test Termination

• SilkTest tests terminate on exceptions which are not explicitly trapped in the testcase.

For example, if a window fails to appear during the setup phase of testing [i.e. the phase driving the application to a verification point], a test would terminate on the first object or window timeout exception that is thrown after the errant window fails to appear.

• WinRunner tests run to termination [in unattended Batch mode] unless an explicit action is taken to terminate the test early. Therefore, tests which ignore this termination model will continue running for long periods of time after a fatal error is encountered. For example, if a window fails to appear during the setup phase of testing, subsequent context sensitive statements [i.e. clicking on a button, performing a menu pick, etc.] will fail, but each failure occurs only after a multi-second object/window “is not present” timeout expires for each missing window and object. [When executing tests in non-Batch mode, that is in Debug, Verify, or Update modes, WinRunner normally presents an interactive dialog box when implicit errors such as missing objects and windows are encountered.]
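The cost of the run-to-termination model is easy to estimate: every statement that touches a missing object waits out its own timeout. The Python sketch below simulates both models with a made-up 10-second timeout and made-up step names (no real waiting happens); it is an illustration of the arithmetic, not either tool's behaviour in detail.

```python
TIMEOUT = 10  # seconds each missing object waits before failing (assumed value)

def run_steps(steps, present, stop_on_first_failure):
    """Simulate a script; return (elapsed_seconds, failed_steps)."""
    elapsed, failures = 0, []
    for step in steps:
        if step in present:
            elapsed += 1                 # object found, the action takes ~1 s
            continue
        elapsed += TIMEOUT               # wait for the object's timeout to expire
        failures.append(step)
        if stop_on_first_failure:        # SilkTest-like: the exception ends the test
            break
    return elapsed, failures

steps = ["File menu", "Name field", "OK button", "Confirm", "Close"]
present = {"File menu"}   # the dialog opened from the File menu never appears

print(run_steps(steps, present, stop_on_first_failure=True))   # (11, ['Name field'])
print(run_steps(steps, present, stop_on_first_failure=False))  # (41, ['Name field', 'OK button', 'Confirm', 'Close'])
```

With only four dependent statements the continuing run already takes almost four times as long, and real setup phases usually contain many more statements than that.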

Addins and Extensions

Out of the box, under Windows, both tools can interrogate and work with objects and windows created with the standard Microsoft Foundation Class (MFC) library. Objects and windows created using a non-MFC technology [or non-standard class naming conventions] are treated as custom objects. But objects and windows created for web applications [i.e. applications which run in a browser], Java applications, Visual Basic applications, and PowerBuilder applications are dealt with in a special manner:

• SilkTest enables support for these technologies using optional extensions. Selected extensions are enabled/disabled in the current Option Set [or the configuration established by the default partner.ini option settings].

• WinRunner enables support for these technologies using optional addins. Selected addins are enabled/disabled using either the Addin Manager at WinRunner startup, or by editing the appropriate wrun.ini file prior to startup.

Note that (1) some combinations of addins [WinRunner] and extensions [SilkTest] are mutually exclusive, (2) some of these addins/extensions may no longer be supported in the newest releases of the tool, (3) some of these addins/extensions may only support the last one or two releases of the technology [for example, versions 5 and 6 of Visual Basic], and (4) some of these addins and extensions may have to be purchased at an additional cost.

Visual Recorders

SilkTest provides visual recorders and wizards for the following activities:

• Creating a test frame with GUI declarations for a full application and adding/deleting selected objects and windows in an existing GUI declarations frame file.

• Capturing user actions with the application into a test case, using either context sensitive [object relative] or analog [X:Y screen coordinate relative] recording techniques.

• Inspecting identifiers, locations and physical tags of windows and objects.

• Checking window and object bitmaps [or parts thereof].

• Creating a verification statement [validating one or more object properties].

WinRunner provides visual recorders and wizards for the following activities:

• Creating an entire GUI Map for a full application and adding/deleting selected objects and windows in an existing GUI Map. It is also possible to implicitly create GUI Map entries by capturing user actions [using the recorder described next].

• Capturing user actions with the application into a test case, using either context sensitive [object relative] or analog [X:Y screen coordinate relative] recording techniques.

• Inspecting logical names, locations and physical descriptions of windows and objects.

• Checking window and object bitmaps [or parts thereof].

• Creating a GUI checkpoint [validating one or more object properties].

• Creating a database checkpoint [validating information in a database].

• Creating a database query [extracting information from a database].

• Locating at runtime a missing object referenced in a testcase [and then adding that object to the GUI Map].

• Teaching WinRunner to recognize a virtual object [a bitmap graphic with functionality].

• Creating Data Tables [used to drive a test from data stored in an Excel-like spreadsheet].

• Checking text on a non-text object [using a built-in character recognition capability].

• Creating a synchronization point in a testcase.

• Defining an exception handler.

Some of these recorders and wizards do not work completely for either tool against all applications, under all conditions. For example, neither tool’s recorder to create a full GUI Map [WinRunner] or test frame [SilkTest] works against large applications, or any web application. Evaluate the recorders and wizards of interest carefully against your applications if these utilities are important to your automated testing efforts.

Friday, May 4, 2007

Selecting the best defect tracking system

System Architecture:

Many older bug or defect tracking systems are client-server based. You need to install the server, and each user needs to install the client software. If external users are involved, this can be problematic because of issues such as firewalls. Also, it is not always feasible to install client software.

Newer systems are more likely to be web-browser based, and no client software needs to be installed (except a browser). A web-based bug tracking system is especially attractive if your users are in different locations and are connected through the internet.

For a web-based bug or defect tracking system, make sure it supports the browsers your users are using. Be aware that many systems support only IE.

Server Operating System:

Most commercial bug tracking systems are Windows based. In such a case, the system likely requires an NT/2000/XP server and a SQL Server database. Note that Windows XP Professional may not be sufficient; a server operating system may be required instead.

Most free bug or defect tracking systems are Linux/Unix based, and may not work as well on Windows. They may also require more technical skill to install and set up.

When vendors say their system is cross-platform, you need to make sure they mean the server. Only a very few bug tracking systems are really cross-platform (with the same code base). Some vendors claim to support multiple operating systems but have completely independent versions for each one, which results in higher costs for the vendor and therefore a higher price for end users.

Backend Database:

Most bug or defect tracking systems require a backend database, but a few are file based. In the latter case, make sure it scales well. If someone tells you that a file based system is better than a database, think twice.

For Windows based systems, database selection may be limited to only Access and SQL Server. On the other hand, some free systems may lock you into just one database, notably MySQL. Only a very few bug tracking systems are really cross database systems.

Be wary of any bug tracking software that uses a non-standard, proprietary database. Such databases are unlikely to be better than the public, commonly used database systems.

Language Support:

Many bug tracking systems do not support localization, particularly for Asian languages. Note that localization involves the web interface, the data, and the email notifications.

If you do need localization, you should find a system that can do that easily.

Web Server:

For Windows based bug tracking systems, IIS is most likely required as the web server.

For Java-based bug tracking systems, a Servlet or J2EE server is most likely required. There are many high quality servers you can download for free.

Programming Language:

Most bug tracking systems are written in C/C++, Perl/PHP, or Java.

Depending on your IT environment and skill set, the programming language may be relevant in selecting your system. For example, if you are developing Java software, it may make sense to use a Java based bug tracking system.

Version Control Integration:

Some bug tracking systems can integrate with source control systems such as CVS, SourceSafe, etc.

Be aware of the limitations, and make sure it does the things you want.

Installation and Configuration:

A bug tracking system is not a desktop application, and it rarely works out of the box. It is not uncommon to spend a few hours setting up such a system, and then more time customizing it.

However, if you need only a lightweight bug tracking system, a heavy, complex, can-do-everything system is certainly overkill, and it may do more harm than good.

Maintenance and Support:

A bug tracking tool is not a super complex software system, but from time to time you may need technical support. As you probably know, the error messages from these systems are often cryptic, and you may not be able to solve the problem on your own.

How a tool handles errors is far more important than you might think. As the administrator, you may want to select a tool that you feel comfortable working with.

When support is needed, it is always urgent to you, but not necessarily to the vendor. Before you purchase the software, ask what the response time for support is.

Features:

Simple is the key here. The system must be simple enough that people like to use it, not so complex that people avoid using it. You might not want to deploy a tool that requires serious end-user training. It is not so much the initial training as the ongoing support your end users need that you should be concerned with.

Yet it should be flexible and configurable enough to satisfy your business needs. If you select a tool that cannot do whatever you intend it to do, then what is the use of it?

Cost of Ownership:

The initial cost of a bug tracking system varies from free to tens of thousands of dollars. But be aware that this is not the same as the total cost of ownership. Some free systems charge a hefty consulting fee for support, and you may end up paying much more than you planned.

You should select a bug tracking system based on your needs, not just the price. If you know what you are doing and do not need commercial support, go for a free one if it meets your requirement.

However, if you unfortunately selected a bad one, you better get out of it as soon as possible, because the longer you keep it, the more moeny and time you will have to spend on it.

In any case, spending many days to setup a free system or even weeks or months to create an in-house system makes no business and economic sense, because if you consider the time spent, you are actually paying much more than just buying one.

WinRunner Tips

The five major areas to know in WinRunner are listed below, with SOME of the subtopics called out for each:

1) GUI Map

- Learning objects

- Mapping custom objects to standard objects

2) Record/Playback

- Record modes: Context Sensitive and Analog

- Playback modes: (Batch), Verify, Update, Debug

3) Synchronization

- Using wait parameter of functions

- Wait window/object info

- Wait Bitmap

- Hard wait()

4) Verification/Checkpoints

- window/object GUI checkpoints

- Bitmap checkpoints

- Text checkpoints (requires TSL)

5) TSL (Test Script Language)

- Enhancing scripts (flow control, parameterization, data-driven tests, user-defined functions, ...)
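The synchronization topics above all share one idea: wait for a condition, up to a timeout, instead of sleeping for a fixed time the way a hard wait() does. The sketch below is generic Python, not WinRunner code. The `wait_until` helper, its timeout, and its polling interval are hypothetical and only illustrate that idea.

```python
import time

def wait_until(condition, timeout=10.0, interval=0.5):
    """Poll condition() until it returns True or `timeout` seconds pass.

    Returns True as soon as the condition holds and False on timeout.
    A hard wait, by contrast, always sleeps for the full time.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)

# Hypothetical usage: the "window" becomes ready on the third poll.
state = {"polls": 0}

def window_ready():
    state["polls"] += 1
    return state["polls"] >= 3

print(wait_until(window_ready, timeout=5, interval=0.01))  # True
```

The script continues as soon as the object is ready, so a fast system is not slowed down by a worst-case fixed delay.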

Calling Scripts and Expected Results

When running in non-batch mode, WinRunner will always look in the calling script's \exp directory for the checks. When running in batch mode, WinRunner will look in the called script's \exp directory.

There is a limitation, though: WinRunner will only look in the called script's \exp directory one call level deep. For example, in batch mode:

script1:
gui_check(...); # will look in script1\exp
call "script2" ();

script2:
gui_check(...); # will look in script2\exp
call "script3" ();

script3:
gui_check(...); # will look in script2\exp (and cause an error)

In non-batch mode:

script1:
gui_check(...); # will look in script1\exp
call "script2" ();

script2:
gui_check(...); # will look in script1\exp (and cause an error)
call "script3" ();

script3:
gui_check(...); # will look in script1\exp (and cause an error)

Run Modes

Batch mode writes results to each individual called test.

Interactive (non-batch) mode writes to the main test.

Data Types

TSL supports two data types, numbers and strings, and you do not have to declare them. Look at this online help topic for some things to be aware of:

"TSL Language", "Variables and Constants", "Type (of variable or constant)"

Generally, you shouldn't see any problems with comparisons.

However, if you perform arithmetic operations you might see some unexpected behavior (again, check out the online help topic mentioned above):

var = "3abc4";
rc = var + 2;   # rc will be 5 :-)
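Why 5? The result suggests that TSL converts a string to a number the way awk does. It uses the leading numeric part ("3") and ignores the rest, so "3abc4" + 2 becomes 3 + 2. That awk-like rule is an assumption inferred from the example, not taken from the WinRunner documentation. The Python sketch below emulates it, and `tsl_to_number` is a hypothetical name.

```python
import re

# Optional leading whitespace and sign, then a number such as "3", "4.5" or ".75",
# with an optional exponent such as "e3".
_LEADING_NUMBER = re.compile(r"\s*[+-]?(\d+\.?\d*|\.\d+)([eE][+-]?\d+)?")

def tsl_to_number(value):
    """Hypothetical emulation of awk-style string-to-number conversion.

    Only the leading numeric prefix counts. A string with no numeric
    prefix converts to 0.
    """
    if isinstance(value, (int, float)):
        return value
    match = _LEADING_NUMBER.match(value)
    return float(match.group(0)) if match else 0.0

var = "3abc4"
rc = tsl_to_number(var) + 2
print(rc)  # 5.0
```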

Debugging

When using pause(x); for debugging, wrap the variable in brackets so you can easily see whether "invisible" characters (e.g., \n, \t, spaces, or nulls) are stored in it:

pause("[" & x & "]");

You can also use WinRunner's debugging features to watch variables, where "invisible" characters (e.g., \n, \t, space) will show themselves. Examples comparing pause(x); with the bracketed version:

Variable      pause(x);    pause("[" & x & "]");
x="a1";       a1           [a1]
x="a1 ";      a1           [a1 ]
x="a1\t";     a1           [a1 ]     (the gap is a tab)
x="a1\n";     a1           [a1       (a line break, then the closing bracket)
                           ]
x="";         (empty)      []
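The bracket trick works in any language. Below is a small Python sketch of it. The helper names `show` and `show_escaped` are hypothetical. As an extra step beyond what pause() does, `show_escaped` uses repr() so that tabs, newlines, and nulls print as visible escape sequences instead of whitespace.

```python
def show(value):
    """Wrap a value in brackets, like pause("[" & x & "]"), so spaces at the ends are visible."""
    return "[" + value + "]"

def show_escaped(value):
    """Like show(), but also spell out tabs, newlines and NULs as escape sequences."""
    # repr() adds surrounding quotes; slice them off so only the brackets delimit the value.
    return "[" + repr(value)[1:-1] + "]"

for x in ["a1", "a1 ", "a1\t", "a1\n", "", "a1\0"]:
    print(show_escaped(x))
# [a1]
# [a1 ]
# [a1\t]
# [a1\n]
# []
# [a1\x00]
```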

Block Comments

To temporarily comment out a block of code, wrap it in a condition that is never true (the code inside must still be valid TSL):

if (FALSE)
{
    ... block of code to be commented out!!
}

Data Driven Test ddt_* functions vs getline/split

Both approaches do almost the same thing. There are some arguably good benefits to using ddt_*, but most of them concern data management. In general, you can always keep the data in Excel and use Save As to convert the file to a delimited text file.

One major difference is performance when playing back a script that has a huge data file. The ddt_* functions currently cannot compare to the much faster getline/split method.
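Outside WinRunner, the getline/split approach amounts to reading the delimited file one line at a time and splitting each line into fields. Below is a minimal Python analogue. The data, the tab delimiter, and the `read_rows` helper are hypothetical. It does not measure the speed difference described above, which is the author's observation of WinRunner.

```python
import io

# Hypothetical tab-delimited text, as Excel's "Save As" (tab-delimited text) might produce.
DATA = "FirstName\tLastName\nJane\tDoe\nJohn\tSmith\n"

def read_rows(stream, delimiter="\t"):
    """Yield one dict per data row, keyed by the column names in the header line."""
    header = stream.readline().rstrip("\r\n").split(delimiter)
    for line in stream:                                  # one line at a time, like getline
        if not line.strip():
            continue                                     # skip blank lines
        fields = line.rstrip("\r\n").split(delimiter)    # like split
        yield dict(zip(header, fields))

for row in read_rows(io.StringIO(DATA)):
    FIRSTNAME = row["FirstName"]
    LASTNAME = row["LastName"]
    print(FIRSTNAME, LASTNAME)
```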

But here is another area to consider: READABILITY. I personally do not like scripts with too many nested function calls (which the parameterize-value method produces), because they can be harder to read for people without a programming background. Example:

edit_set("FirstName", ddt_val(table, "FirstName"));

edit_set("LastName", ddt_val(table, "LastName"));

So what I typically do is declare my own variables at the beginning of the script, assign the values to them, and use the variable names in the rest of the script. It doesn't matter whether I'm using getline/split or the ddt_val function. This is also very useful when I need to change the value of a variable, because they are all initialized at the top of the script (whenever possible). Example with ddt_* functions in a script:

FIRSTNAME=ddt_val(table, "FirstName");

LASTNAME=ddt_val(table, "LastName");

...

edit_set("FirstName", FIRSTNAME);

edit_set("LastName", LASTNAME);

And most of the time I have a driver test that calls another test and passes it an array of data used to update a form. Example with ddt_* functions before calling another script:

# Driver script will have
...
MyPersonArray["FIRSTNAME"] = ddt_val(table, "FirstName");
MyPersonArray["LASTNAME"] = ddt_val(table, "LastName");
call AddPerson(MyPersonArray);
...

# Called script (AddPerson, whose test parameter is named Person) will have
edit_set("FirstName", Person["FIRSTNAME"]);
edit_set("LastName", Person["LASTNAME"]);

As you can see, there are many ways to do the same thing. Keep in mind the skill level of the people who may inherit the scripts after they are created, and use one consistent method throughout the project.

String Vs Number Comparison

Comparing strings with numbers is not a good idea.

Try this sample to see why:

c1 = 47.88 * 6;
c2 = "287.28";
# Prints a decimal value while suppressing non-significant zeros
# and converts the float to a string.
c3 = sprintf("%g", c1);
pause("c1 = [" & c1 & "]\nc2 = [" & c2 & "]\nc3 = [" & c3 & "]\n" &
      "c1 - c2 = [" & (c1 - c2) & "]\nc1 - c3 = [" & (c1 - c3) & "]\nc2 - c3 = [" & (c2 - c3) & "]");
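The same pitfall exists outside TSL. 47.88 has no exact binary representation, so 47.88 * 6 is not exactly the number that the string "287.28" converts to. Below is a minimal Python sketch of the sample above, assuming IEEE 754 double arithmetic; Python uses it, but whether TSL does is an assumption. The 1e-9 tolerance is an arbitrary illustrative choice.

```python
import math

c1 = 47.88 * 6        # a binary float, not exactly 287.28
c2 = "287.28"         # the same value held as a string
c3 = "%g" % c1        # like sprintf("%g", c1): significant digits only

# An exact comparison after converting the string to a number fails.
print(c1 == float(c2))             # False
print(c1 - float(c2))              # a tiny nonzero difference

# Safer: compare with a tolerance, or compare consistently formatted strings.
print(math.isclose(c1, float(c2), rel_tol=0, abs_tol=1e-9))  # True
print(c3 == c2)                    # True
```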